App Store Optimization (ASO) in the Age of Intelligent Discovery

App Store Optimization (ASO) used to be a craft dominated by keyword spreadsheets, clever titles, and a steady cadence of creative tests. That era isn’t gone, but the ground is shifting beneath it. App discovery is becoming “intelligent”: ranking and recommendation systems are increasingly driven by machine learning; on-device signals personalize results; multimodal search is arriving; and generative AI is rewriting how stores present, summarize, and even compose metadata. If you’re still operating with a 2019 playbook, you’ll get outpaced by products that feed these new systems the signals they crave. This guide explains what “intelligent discovery” means, how it is reshaping ASO, and what to do about it today. You’ll learn the new ranking levers, how to structure your testing roadmap, which data to collect, and how to evolve your team’s skills for the next five years.

1) From “Keywords + Clicks” to “Signals + Systems”

Yesterday’s ASO model: Find high-volume keywords, place them in the title and subtitle, localize, earn installs, and iterate creatives. Rankings were influenced by text relevance and install velocity.

Today’s intelligent model: App stores and mobile ecosystems use multi-signal models. Keywords still matter, but they’re just one input among many, including:

-

User satisfaction signals: Retention, uninstall rate, crash rate, average rating, rating trajectory, review sentiment, and engagement depth.

-

Personalization signals: On-device interests, past installs, subscription likelihood, device category (e.g., tablet vs. phone), even geotemporal usage patterns.

-

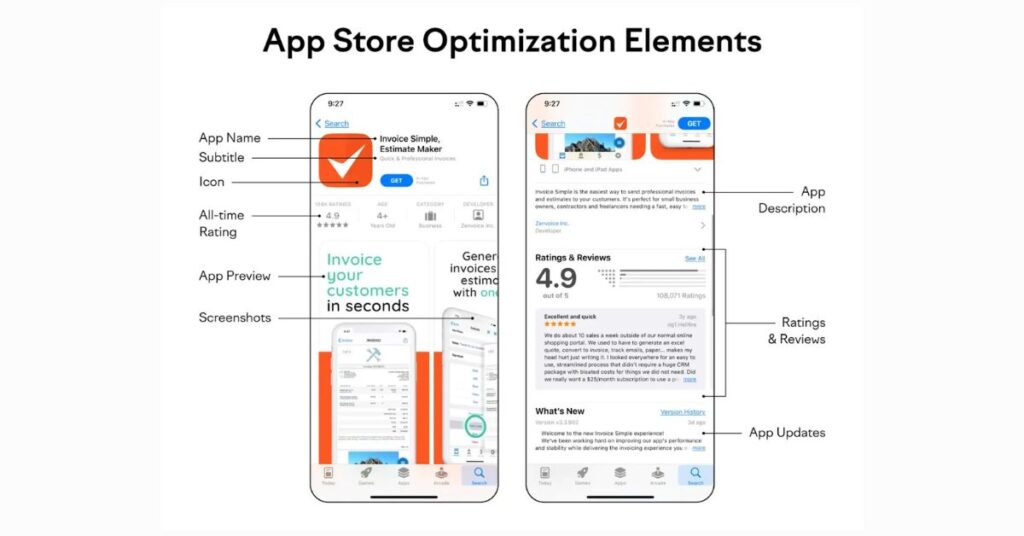

Creative comprehension: Models evaluate your icon, screenshots, and preview videos for clarity, theme, and relevance to the query or user cluster.

-

Entity understanding: Stores map your app to topics (“guided meditation,” “budgeting for freelancers”) rather than only keywords, and match queries to entities.

Implication: ASO is now part product-market-fit analytics, part creative communication, and part machine-learning supply chain. The “supply” you provide to ranking systems is your metadata, creatives, behavioral outcomes, and audience coherence. Optimize all of them.

2) The New Ranking Levers You Can Actually Influence

You can’t control the algorithm, but you can improve the signals it reads.

a) Text Relevance 2.0 (Entities, Not Just Terms)

- Build a topic lattice: list the core user problems your app solves, then map each to query families (short-tail, mid-tail, long-tail) and to store categories and editorial collections.
- Write metadata that speaks to user intents (“learn Spanish fast”, “sleep better tonight”) instead of stuffing synonyms. Cohesive intent signals help models correctly classify your app’s entity space.
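To make the topic lattice concrete, here is a minimal Python sketch that groups raw search phrases into query families by shared intent words. The synonym map, stopword list, and 0.3 similarity threshold are illustrative assumptions, not values from any store or tool; a production version would more likely use text embeddings, because plain word overlap only links “expense tracker” to “budget planner” if you map those words together yourself.

```python
# Minimal sketch: group search phrases into query families by shared intent words.
# SYNONYMS, STOPWORDS, and the threshold are hypothetical, hand-tuned values.

STOPWORDS = {"a", "an", "the", "for", "to", "my", "with", "app", "and"}
SYNONYMS = {  # fold near-synonyms onto one canonical intent word
    "expense": "budget", "money": "budget", "spending": "budget",
    "tracker": "planner", "manager": "planner",
}


def intent_tokens(phrase: str) -> set[str]:
    """Lowercase, drop filler words, and map synonyms to a canonical word."""
    return {SYNONYMS.get(w, w) for w in phrase.lower().split() if w not in STOPWORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two phrases have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def group_into_families(phrases: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Greedy single pass: join the first family whose seed phrase is similar enough."""
    families: list[list[str]] = []
    seeds: list[set[str]] = []
    for phrase in phrases:
        tokens = intent_tokens(phrase)
        for i, seed in enumerate(seeds):
            if jaccard(tokens, seed) >= threshold:
                families[i].append(phrase)
                break
        else:  # no similar family found, so this phrase seeds a new one
            families.append([phrase])
            seeds.append(tokens)
    return families


queries = [
    "budget planner",
    "expense tracker app",
    "money manager for students",
    "sleep better tonight",
    "sleep sounds tonight",
]
for family in group_into_families(queries):
    print(family)
```

With these assumed synonyms, the three budgeting phrases land in one family and the two sleep phrases in another; each family is then the unit you write unified messaging for.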

b) Behavioral Quality

- Track Day 0, Day 1, and Day 7 retention, time-to-value, and core action completion rate for store-sourced cohorts. If store-sourced users fail to activate, your relevance signals decay.
- Reduce uninstall-after-first-open with shorter onboarding, clear empty states, and “aha” scaffolds (tooltips, smart defaults, templates).
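The two store-cohort measures above, Day N retention and time-to-value, can be computed from ordinary install records. This is a minimal sketch; the `Install` record, its field names, and the `"store_search"` source label are hypothetical, so map them onto whatever your analytics pipeline actually exports.

```python
from dataclasses import dataclass, field
from datetime import date, datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Install:
    """One install record; field names are illustrative assumptions, not a store API."""
    user_id: str
    source: str                                          # e.g. "store_search", "paid_social" (hypothetical labels)
    installed_at: datetime
    active_days: set[date] = field(default_factory=set)  # calendar days with at least one session
    first_core_action_at: Optional[datetime] = None      # None if the user never activated


def day_n_retention(installs: list[Install], n: int, source: str = "store_search") -> float:
    """Share of one source's installs that were active exactly n calendar days after install."""
    cohort = [i for i in installs if i.source == source]
    if not cohort:
        return 0.0
    retained = sum(1 for i in cohort if i.installed_at.date() + timedelta(days=n) in i.active_days)
    return retained / len(cohort)


def median_time_to_value_hours(installs: list[Install], source: str = "store_search") -> Optional[float]:
    """Median hours from install to first core action, among users who reached it."""
    waits = [
        (i.first_core_action_at - i.installed_at).total_seconds() / 3600
        for i in installs
        if i.source == source and i.first_core_action_at is not None
    ]
    return median(waits) if waits else None


t0 = datetime(2024, 5, 1, 9, 0)
installs = [
    Install("a", "store_search", t0, {date(2024, 5, 1), date(2024, 5, 2)}, t0 + timedelta(hours=1)),
    Install("b", "store_search", t0, {date(2024, 5, 1)}, t0 + timedelta(hours=3)),
    Install("c", "paid_social", t0, {date(2024, 5, 2)}, None),
]
print(day_n_retention(installs, 1), median_time_to_value_hours(installs))
```

Filtering by source first is the point: blended retention can hide a store cohort that activates poorly.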

c) Ratings and Sentiment Trajectory

- Intelligent systems weigh not just your average rating but also velocity (how fast ratings improve) and recency (what last month looks like). Launch just-in-time review prompts triggered by genuine success moments, and implement a sentiment deflection loop (in-app support, graceful error handling) that resolves problems before they turn into negative reviews.

d) Creative Comprehension

- Treat icon, screenshots, and preview video as machine-readable assets. Ask: “Can a model infer my category, value prop, and target audience from a glance?” Use headline-on-image frameworks (“Track Every Expense in 10 Seconds”), real UI context, and consistent brand motifs so models and humans connect the dots quickly.

e) Cohort Coherence

- Acquisition that is too broad can confuse personalization systems. Tighten paid UA and web-to-app so that the people you bring look like the people you keep. That consistency improves downstream recommendation eligibility.

Multimodal and Conversational Search Are Coming to Stores

Search is expanding beyond typed keywords:

- Natural-language prompts: “I want a budget app that syncs with my bank and splits bills with roommates.” Your metadata and creatives must mirror these statements.
- Voice queries: Short, intent-rich phrases (“learn piano basics this weekend”) emphasize outcomes over features.
- Image cues and video previews: Store surfaces increasingly show richer, story-like modules that models can parse.

Action: Add a “prompt bank” to your ASO process. Collect real phrases from support tickets, social posts, and user interviews. Fold them into subtitles, short descriptions, captions on screenshots, and preview video scripts. The goal is semantic overlap with how humans actually ask.
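A rough way to check that semantic overlap is to measure how much of each prompt-bank phrase already appears in some metadata field or caption. The sketch below uses exact word matching, which is crude (it treats “splits” and “split” as different words); the stopword list, field names, and sample copy are hypothetical.

```python
import re
from typing import Optional

# Hypothetical filler-word list; tune it for your category and language.
STOPWORDS = {"i", "a", "an", "the", "to", "and", "with", "my", "that", "want", "app", "for", "this"}


def content_words(text: str) -> set[str]:
    """Lowercase word set with filler words removed."""
    return {w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOPWORDS}


def prompt_coverage(prompt_bank: list[str], fields: dict[str, str]) -> dict[str, tuple[Optional[str], float]]:
    """For each prompt, the metadata field covering most of its words, and that share (0.0 to 1.0)."""
    report = {}
    for prompt in prompt_bank:
        words = content_words(prompt)
        best_field, best_share = None, 0.0
        for name, text in fields.items():
            share = len(words & content_words(text)) / len(words) if words else 0.0
            if share > best_share:
                best_field, best_share = name, share
        report[prompt] = (best_field, best_share)
    return report


listing = {
    "subtitle": "Split bills with roommates",
    "screenshot_1": "Track every expense in 10 seconds",
}
bank = ["split bills with roommates", "sleep better tonight"]
for prompt, (field_name, share) in prompt_coverage(bank, listing).items():
    print(f"{prompt!r}: best field={field_name}, coverage={share:.0%}")
```

Prompts with low coverage are candidates for the next subtitle, caption, or video-script revision.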

Generative AI Inside the Storefront

Generative systems are appearing in app stores as:

- Generated summaries of features and reviews.
- Auto-suggested queries and category overviews.
- Dynamic bundles (“Apps to Get Better Sleep This Week”) that reflect emerging trends.

Your job is to feed them better input:

- Metadata that summarizes well: Use crisp sentence structure, front-loaded benefits, and consistent terminology. Avoid jargon; generative summarizers reward clarity.
- Reviews that narrate outcomes: Encourage users to mention specific wins (“cut my grocery bill by 20%”). These lines become the raw material for store summaries.
- Feature naming discipline: Use steady, descriptive names for flagship features to stabilize how generative systems label your app.

Privacy Shifts and the New Attribution Reality

Since Apple’s App Tracking Transparency (ATT), and with privacy protections rising across platforms, store ecosystems lean harder on on-device and first-party signals. That means:

- Context beats tracking: Lean into declarative context (metadata, categories, captions) and behavioral proof (retention, engagement).
- Server-side truth: Build a first-party analytics backbone to understand store cohorts without relying on invasive identifiers. Cohort-based KPIs and incrementality tests matter more than ever.

Keyword Research Evolves But Doesn’t Disappear

Traditional keyword tools still help you prioritize opportunities, especially for “head” queries. What changes:

- Query families over single terms: Cluster synonyms and variations into intent packs (e.g., “budget planner,” “expense tracker app,” “money manager for students”). Optimize for the family with unified messaging.
- Emergent intent mining: Scrape your own reviews, competitor reviews, Reddit threads, and Q&A forums to detect rising phrases (“zero-based budgeting,” “envelope method,” “AI receipt scan”). Update metadata quarterly to reflect emergent entities.
- Local vernacular: Intelligent discovery respects regional phrasing. Localize beyond translation: capture slang and cultural references that align with how locals ask.
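Emergent intent mining can start as simple period-over-period phrase counting before you invest in a full NLP pipeline. This sketch counts two-word phrases per review in a previous and a current window and flags phrases that at least doubled; the stopword list, thresholds, and sample reviews are illustrative assumptions.

```python
import re
from collections import Counter

# Hypothetical filler words to skip when forming two-word phrases.
STOPWORDS = {"the", "a", "an", "is", "and", "for", "to", "of", "my", "it"}


def bigrams(text: str) -> set[str]:
    """Distinct two-word phrases in one review, skipping pairs that contain filler words."""
    words = re.findall(r"[a-z0-9'-]+", text.lower())
    return {f"{a} {b}" for a, b in zip(words, words[1:]) if a not in STOPWORDS and b not in STOPWORDS}


def review_counts(reviews: list[str]) -> Counter:
    """Number of reviews mentioning each phrase (a review counts once per phrase)."""
    counts: Counter = Counter()
    for review in reviews:
        counts.update(bigrams(review))
    return counts


def rising_phrases(previous: list[str], current: list[str],
                   min_reviews: int = 2, min_ratio: float = 2.0) -> list[tuple[str, int, float]]:
    """Phrases whose review count grew by at least min_ratio, sorted by growth."""
    prev, curr = review_counts(previous), review_counts(current)
    rising = []
    for phrase, count in curr.items():
        ratio = count / (prev[phrase] + 1)  # +1 smoothing: brand-new phrases get a finite ratio
        if count >= min_reviews and ratio >= min_ratio:
            rising.append((phrase, count, ratio))
    return sorted(rising, key=lambda item: (-item[2], item[0]))


previous = ["great app for tracking spending", "love the charts"]
current = [
    "the envelope method is great",
    "finally envelope method support",
    "love the envelope method",
    "charts are nice",
]
print(rising_phrases(previous, current))
```

Counting reviews rather than raw mentions keeps one long, repetitive review from inflating a phrase.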

Creatives: Designed for People, Readable by Machines

Icons: Simplicity scales. Use a single dominant shape or letterform that telegraphs your category. Small-screen and dark-mode mockups are mandatory signoffs.

Screenshots:

- Frame #1: Outcome headline + UI in action (“Fall Asleep in 5 Minutes” with a visible sleep timer).
- Frames #2–#5: Use-case path (discover → act → result), not random features.
- Microcopy that mirrors search intent: reuse phrasing from your prompt bank.

Preview video:

- First 3 seconds: show the moment of value (e.g., receipt camera scanning accurately).
- Add subtitles. Many users (and models) “watch” muted.
- Keep to one storyline; resist kitchen-sink assemblies.

A/B testing: Test message architecture (problem → promise → proof → path) before color or layout tweaks. Machines are more likely to understand consistent structure.

Ratings, Reviews, and Sentiment Intelligence

Intelligent discovery heavily weights sentiment clarity:

- Prompt placement: Trigger review prompts after a success event (completed workout, language lesson streak). Never after interruptions like paywalls or crashes.
- In-app deflection: Offer quick support or bug report paths before prompting unhappy users for public reviews.
- Thematic reply templates: Respond to reviews by cluster (bug, pricing, content request). Use replies to reinforce entities (“We’ve added zero-based budgeting and shared envelopes in v2.3.”).
- Review mining loop: Feed common phrases back into your text metadata and screenshot captions.
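The prompt-placement and deflection rules above reduce to a small decision function run at the end of each user action. This is a sketch under stated assumptions: the event names, the 90-day cooldown, and the “reported unhappy” flag are hypothetical. “request_review” would hand off to the platform’s own in-app review API (StoreKit on iOS, the Play In-App Review API on Android), which may still choose not to display a prompt.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical event names; substitute your own analytics events.
SUCCESS_EVENTS = {"workout_completed", "lesson_streak_3"}
INTERRUPTION_EVENTS = {"paywall_shown", "crash", "error_shown"}


@dataclass
class SessionState:
    events: list[str]                     # events so far this session, oldest first
    last_prompted_at: Optional[datetime]  # when we last asked for a review, if ever
    reported_unhappy: bool = False        # e.g. a low score on an in-app "How's it going?" check


def next_review_step(state: SessionState, now: datetime,
                     cooldown: timedelta = timedelta(days=90)) -> str:
    """Return 'offer_support', 'request_review', or 'wait'."""
    if state.reported_unhappy:
        return "offer_support"  # deflect to in-app support, not the public store
    if not state.events or state.events[-1] not in SUCCESS_EVENTS:
        return "wait"           # only ask right after a genuine success moment
    if INTERRUPTION_EVENTS & set(state.events):
        return "wait"           # never ask in a session that hit a paywall, crash, or error
    if state.last_prompted_at is not None and now - state.last_prompted_at < cooldown:
        return "wait"           # respect a cooldown between asks
    return "request_review"


now = datetime(2024, 6, 1)
print(next_review_step(SessionState(["app_open", "workout_completed"], None), now))
```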

Localization for Intelligent Systems

Go beyond translation:

- Market-fit messaging: In some regions, “free offline” beats “AI-powered”; in others, “privacy-first” is the headline. Tailor top-frame benefits per market.
- Cultural screenshots: Use date, currency, and content examples that feel local.
- Regional feature gates: If certain features aren’t available (e.g., bank sync), don’t advertise them in that locale; mismatch hurts satisfaction signals.
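A regional feature gate is easy to enforce mechanically if each screenshot caption is tagged with the feature it advertises. The sketch below flags captions that promise a feature the locale does not have; the locale table, the tagging scheme, and the sample captions are hypothetical.

```python
# Hypothetical feature availability per locale; in practice this would come from your config service.
FEATURES_BY_LOCALE = {
    "en-US": {"bank_sync", "offline_mode", "shared_envelopes"},
    "es-MX": {"offline_mode", "shared_envelopes"},
}

# Each screenshot caption is tagged with the feature it advertises (tagging scheme is an assumption).
CAPTIONS_BY_LOCALE = {
    "en-US": [("Sync your bank in seconds", "bank_sync"),
              ("Track spending offline", "offline_mode")],
    "es-MX": [("Sincroniza tu banco", "bank_sync"),              # "Sync your bank"
              ("Controla gastos sin conexión", "offline_mode")],  # "Control spending offline"
}


def advertised_but_unavailable(captions_by_locale: dict[str, list[tuple[str, str]]],
                               features_by_locale: dict[str, set[str]]) -> list[tuple[str, str, str]]:
    """List (locale, feature, caption) triples that promise a feature the locale lacks."""
    problems = []
    for locale, captions in captions_by_locale.items():
        available = features_by_locale.get(locale, set())
        for caption, feature in captions:
            if feature not in available:
                problems.append((locale, feature, caption))
    return problems


for locale, feature, caption in advertised_but_unavailable(CAPTIONS_BY_LOCALE, FEATURES_BY_LOCALE):
    print(f"[{locale}] '{caption}' advertises unavailable feature: {feature}")
```

Running a check like this before each localized release keeps creative refreshes from reintroducing a mismatch.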

Alternative Stores and Device Surfaces

Discovery now spans:

- Alternative Android stores (regional ecosystems, OEM stores).
- Wearables, TVs, cars: Cross-surface relevance is rewarded when the app experience and metadata are coherent.
- Web-to-App and App Clips / Instant Apps: Lightweight entry points seed strong activation signals that can boost store perception of fit.

Action: Maintain surface-specific metadata (e.g., concise copy for wearable listings) and ensure the first-run flow is optimized per device.

ASO + SEO + Paid UA Convergence

Users bounce between the web and stores. Intelligent discovery systems observe this dance:

- Consistent narratives: Align your website’s H1, schema markup, and feature descriptions with store metadata. Semantic consistency supports entity understanding across surfaces.
- Paid signals that help ASO: Tight interest targeting improves cohort coherence, which in turn improves post-install outcomes and recommendation eligibility.
- Deferred deep links: Smooth handoffs from ads and the web to the right in-app destination improve time-to-value, protecting your behavioral signals.
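One way to keep website and store narratives aligned is to generate both from a single list of feature names. The sketch below emits schema.org `SoftwareApplication` JSON-LD (`name`, `applicationCategory`, `operatingSystem`, and `featureList` are schema.org properties) and reports feature names that have drifted out of a piece of copy. The app name “Budgetly”, the category value, and the feature names are hypothetical placeholders.

```python
import json

# Hypothetical single source of truth for flagship feature names.
FEATURE_NAMES = ["Receipt Scan", "Shared Envelopes", "Zero-Based Budgets"]


def software_app_jsonld(name: str, category: str, operating_system: str, features: list[str]) -> str:
    """Build schema.org SoftwareApplication markup for a <script type="application/ld+json"> tag."""
    return json.dumps({
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "applicationCategory": category,
        "operatingSystem": operating_system,
        "featureList": features,
    }, indent=2)


def drifted_names(copy_text: str, features: list[str]) -> list[str]:
    """Feature names that do not appear verbatim (case-insensitive) in a piece of copy."""
    lowered = copy_text.lower()
    return [f for f in features if f.lower() not in lowered]


print(software_app_jsonld("Budgetly", "FinanceApplication", "iOS, Android", FEATURE_NAMES))
# Paraphrased copy ("scan receipts", "share envelopes") is reported as drift from the canonical names.
print(drifted_names("Scan receipts and share envelopes with roommates.", FEATURE_NAMES))
```

The same `FEATURE_NAMES` list can feed store descriptions and in-app labels, which is what keeps naming steady across surfaces.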

Measurement for the Intelligent Era

Move from vanity metrics to signal-aware KPIs:

-

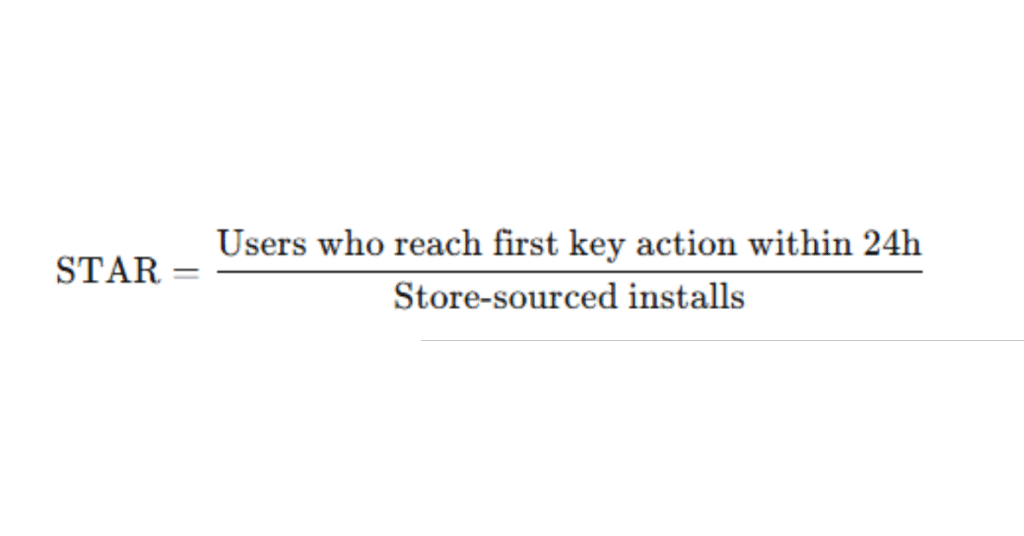

Store Traffic Activation Rate (STAR):

This approximates the “value realization” models watch.

-

Review Sentiment Momentum (RSM):

Weighted index of ratings recency × average star delta × positive keyword frequency. Track weekly. -

Creative Comprehension Score (CCS):

Internal rubric: Can a new person infer category, core value, and audience in 5 seconds from icon + first screenshot? Score 0–10. Improve until ≥8. -

Cohort Coherence Index (CCI):

Variance of core retention metrics across acquisition sources. Lower variance = healthier, more predictable signals. -

Query Family Coverage (QFC):

% of priority intent packs with explicit mention in metadata and at least one screenshot caption.

These aren’t official store metrics, but they align your team with what modern systems value.
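Because these KPIs are internal, their exact formulas are yours to choose. The sketch below is one possible implementation of STAR, RSM, CCI, and QFC as defined above. The RSM reading in particular is an assumption: recency is taken as the share of all ratings that arrived in the recent window, star delta as recent mean minus prior-window mean, and keyword frequency as the share of recent reviews containing a positive term. Window sizes and term lists are up to you.

```python
from statistics import mean, pvariance


def star(store_installs: int, activated_within_24h: int) -> float:
    """STAR: share of store-sourced installs that complete a key action within 24 hours."""
    return activated_within_24h / store_installs if store_installs else 0.0


def rsm(recent_stars: list[int], prior_stars: list[int], recent_reviews: list[str],
        positive_terms: set[str], total_ratings: int) -> float:
    """RSM, one possible reading: recency x average star delta x positive keyword frequency."""
    if not recent_stars or not prior_stars or not recent_reviews or total_ratings == 0:
        return 0.0
    recency = len(recent_stars) / total_ratings           # share of all ratings in the recent window
    star_delta = mean(recent_stars) - mean(prior_stars)   # negative when ratings are slipping
    positive = sum(any(t in r.lower() for t in positive_terms) for r in recent_reviews)
    return recency * star_delta * (positive / len(recent_reviews))


def cci(retention_by_source: dict[str, float]) -> float:
    """CCI: population variance of a retention metric across sources (needs at least one source)."""
    return pvariance(list(retention_by_source.values()))


def qfc(intent_packs: dict[str, list[str]], metadata_text: str, captions: list[str]) -> float:
    """QFC: % of intent packs mentioned in metadata AND in at least one screenshot caption."""
    if not intent_packs:
        return 0.0
    meta = metadata_text.lower()
    caps = [c.lower() for c in captions]
    covered = [
        pack
        for pack, phrases in intent_packs.items()
        if any(p.lower() in meta for p in phrases)
        and any(p.lower() in cap for cap in caps for p in phrases)
    ]
    return 100 * len(covered) / len(intent_packs)


print(star(200, 90))
print(cci({"organic": 0.2, "paid": 0.4}))
print(qfc({"budgeting": ["budget planner"], "sleep": ["sleep better"]},
          "Expense tracker and budget planner", ["Your budget planner in 10 seconds"]))
```

Whatever definitions you settle on, freeze them in one shared module so week-over-week trends compare like with like.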

Experimentation: Faster Cycles, Smarter Bets

Cadence: Ship metadata or creative tests every 2–4 weeks per locale. Small, consistent improvements compound.

Design experiments around hypotheses:

- “Users searching ‘sleep better tonight’ respond to outcome-first framing” (vs. feature-first).
- “Students in LATAM prefer price transparency in the first two screenshots; this will lift STAR by 3–5%.”

Runbook:

- Define primary KPI (e.g., store conversion rate) and guardrails (rating stability, Day 1 retention).
- Launch one variable change per test (headline, first screenshot narrative).
- Hold for a full weekly cycle to smooth weekday/weekend effects.
- Roll forward winners; archive learnings in a Message Bank.
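Store-native tools (store listing experiments in Google Play Console, product page optimization in App Store Connect) report their own results; if you analyze tests from your own data instead, the runbook translates into a decision rule like the one below. It is a sketch: the pooled two-proportion z-test is a standard choice for comparing conversion rates, while the `Arm` fields, the 7-day minimum, and the guardrail tolerances are illustrative assumptions.

```python
from dataclasses import dataclass
from math import erf, sqrt


@dataclass
class Arm:
    page_views: int      # product page views in the test window
    installs: int        # installs attributed to this arm
    avg_rating: float    # guardrail: average rating observed during the test
    d1_retention: float  # guardrail: Day 1 retention of this arm's installs


def two_sided_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Pooled two-proportion z-test for a difference in conversion rates."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))  # standard normal CDF at |z|
    return 2 * (1 - phi)


def decide(control: Arm, variant: Arm, days_run: int, alpha: float = 0.05,
           max_rating_drop: float = 0.05, max_d1_drop: float = 0.01) -> str:
    """Apply the runbook: full week first, then guardrails, then the primary KPI."""
    if days_run < 7:
        return "keep_running"  # hold for a full weekly cycle
    if (variant.avg_rating < control.avg_rating - max_rating_drop
            or variant.d1_retention < control.d1_retention - max_d1_drop):
        return "reject_guardrail"
    p = two_sided_p_value(control.installs, control.page_views, variant.installs, variant.page_views)
    lifted = variant.installs / variant.page_views > control.installs / control.page_views
    return "roll_forward" if p < alpha and lifted else "no_clear_winner"


control = Arm(page_views=10_000, installs=3_000, avg_rating=4.5, d1_retention=0.40)
variant = Arm(page_views=10_000, installs=3_300, avg_rating=4.5, d1_retention=0.40)
print(decide(control, variant, days_run=7))
```

Checking guardrails before significance means a variant that converts better but lowers Day 1 retention never gets rolled forward.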

Data, Tooling, and the ASO “Brain”

To compete, you need an ASO brain, a living system of truth:

- Data lake: Keep store-sourced cohort metrics, creative versions, metadata snapshots, and test outcomes in one place. Tag everything by locale and date.
- NLP pipelines: Classify reviews, extract intents, and monitor sentiment drift. Feed top phrases back into copy and creatives.
- Creative version control: Keep a clean changelog with thumbnails and performance deltas for every test.
- Alerting: Trigger alerts when RSM dips, first-day uninstalls spike, or conversion drops after an OS update.
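The alerting rule can be as simple as comparing this week’s metrics with a trailing baseline. In this sketch the `WeeklyMetrics` fields and the thresholds (an absolute RSM drop of 0.05, a 20% relative rise in first-day uninstalls, a 10% relative fall in conversion) are hypothetical defaults to tune against your own noise levels.

```python
from dataclasses import dataclass


@dataclass
class WeeklyMetrics:
    rsm: float                       # Review Sentiment Momentum for the week
    first_day_uninstall_rate: float  # share of installs removed within 24 hours
    conversion_rate: float           # store page view-to-install rate


def aso_alerts(current: WeeklyMetrics, baseline: WeeklyMetrics, os_update_this_week: bool = False,
               rsm_drop: float = 0.05, uninstall_rise: float = 0.20,
               conversion_fall: float = 0.10) -> list[str]:
    """Compare this week against a baseline and return human-readable alerts."""
    alerts = []
    if current.rsm < baseline.rsm - rsm_drop:  # absolute drop
        alerts.append("RSM dipped below baseline")
    if current.first_day_uninstall_rate > baseline.first_day_uninstall_rate * (1 + uninstall_rise):
        alerts.append("First-day uninstalls spiked")
    if current.conversion_rate < baseline.conversion_rate * (1 - conversion_fall):
        message = "Store conversion dropped"
        if os_update_this_week:
            message += " (OS update this week: check rendering and compatibility)"
        alerts.append(message)
    return alerts


baseline = WeeklyMetrics(rsm=0.10, first_day_uninstall_rate=0.20, conversion_rate=0.30)
this_week = WeeklyMetrics(rsm=0.02, first_day_uninstall_rate=0.30, conversion_rate=0.25)
for alert in aso_alerts(this_week, baseline, os_update_this_week=True):
    print(alert)
```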

Team Skills for the Next Five Years

Tomorrow’s ASO team looks different:

- Product-minded ASO lead who understands activation funnels, not just keywords.
- Creative strategist fluent in storytelling and visual hierarchy, comfortable designing for machine comprehension.
- Data analyst who can cluster queries, build basic NLP classifiers, and run cohort analysis.
- Localization strategist with cultural intelligence, not merely translation.
- Lifecycle marketer to shepherd store-sourced users through onboarding to first value.

Invest in cross-training: teach designers ASO principles and teach ASO managers funnel analytics.

Ethical and Governance Considerations

Intelligent discovery rewards good experiences and punishes dark patterns. Keep your playbook clean:

- Truth in advertising: Don’t show features you don’t have or overstate outcomes. Misalignment produces bad reviews, which models will notice quickly.
- Privacy by design: Make consent flows clear and optional features explicit. Ethical practices reduce churn and improve sentiment.
- Accessibility: Legible text in creatives, voiceover-friendly previews, and contrast-friendly color choices help real people and improve machine readability.

A 90-Day Plan to Modernize Your ASO

Days 1–15: Baseline & Strategy

1. Audit metadata for intent coherence; map your topic lattice and query families per locale.

2. Build an initial prompt bank from reviews, support tickets, and social mentions.

3. Baseline your KPIs: STAR, RSM, D1/D7 retention (store cohorts), uninstall-after-first-open, and conversion rate.

Days 16–30: Creative Re-Architecture

4. Redesign icon and first two screenshots around the outcome-first message. Add clear, human-readable captions.

5. Script a 15–20s preview video with the “moment of value” in the first 3 seconds.

Days 31–45: Sentiment & Onboarding

6. Implement just-in-time review prompts tied to success events; add in-app deflection for unhappy users.

7. Simplify onboarding, cut one step, and auto-fill defaults to reduce time-to-value.

Days 46–60: Localization & Cohorts

8. Localize messages, not just words: adapt headlines to local priorities; replace screenshots with local currency/date examples.

9. Align paid UA with your best-retaining cohorts to improve cohort coherence.

Days 61–75: Testing Engine

10. Launch your first two A/B tests (headline wording; first screenshot narrative).

11. Stand up a simple NLP review miner to track rising intents.

Days 76–90: Feedback Loops

12. Roll forward winners and document learnings in the Message Bank.

13. Refresh metadata to include any emergent entities discovered.

14. Set monthly rituals: metric review, creative roadmap, localization tune-ups.

Tactical Checklists

Metadata Checklist

- Title/subtitle front-load the main outcome.
- Short description mirrors at least two phrases from the prompt bank.
- Feature names are consistent across website, in-app, and stores.
- Localization adapts benefits to market priorities.

Creatives Checklist

- Icon communicates category and is legible at small sizes.
- First screenshot states the promise; subsequent ones show the path.
- Captions are succinct, legible, and intent-aligned.
- Preview video shows value in ≤3 seconds; includes subtitles.

Sentiment Checklist

- In-app success triggers review prompt.
- Negative experience routes to support, not the store.
- Review replies reinforce entity terms and roadmap transparency.

Measurement Checklist

- STAR, RSM, CCI tracked weekly.
- A/B test log with hypotheses and outcomes.
- Review NLP running; emergent intents fed into metadata quarterly.

Case-Style Scenarios

Scenario A: Sleep App Plateau

- Problem: Conversion stable, rankings slipping.
- Move: Reframe creatives from “sounds & playlists” to “fall asleep in 5 minutes” outcome; tighten onboarding to deliver first guided session in 30 seconds; prompt reviews after two successful nights.
- Result: STAR up 7%, RSM positive, regained recommendation slots for “insomnia help.”

Scenario B: Budget App Expanding to LATAM

- Problem: Translation done, installs up, retention down.
- Move: Replace the first screenshot headline with “control gastos sin conexión” (control spending offline), show local currency in the UI, and defer the bank-sync message until after the first manual entry.
- Result: D1 retention up 12%, review sentiment shifts from confusion to clarity.

Scenario C: Fitness App With Broad UA

- Problem: High install volume, poor personalization performance.
- Move: Narrow paid targeting to strength-training interests; creatives emphasize “progressive overload” entity; metadata highlights strength programs over generic “workout.”
- Result: Cohort coherence improves; recommendation impressions increase in “weightlifting” clusters.

What to Stop Doing

- Keyword stuffing divorced from user intent.
- Feature-salad screenshots with tiny, unreadable text.
- One-size-fits-all localization that ignores cultural priorities.
- Chasing installs at any cost: it pollutes your behavioral signals.
- Irregular testing: fast-moving ecosystems punish stagnation.

What to Start Doing

- Build an ASO brain: message bank, prompt bank, review NLP, and a clean changelog.
- Optimize for time-to-value as a first-class ASO metric.
- Craft creatives that a person and a model can understand in five seconds.
- Treat reviews as metadata: mine, reply, and recycle language.
- Align SEO, ASO, and paid UA under one narrative.

Conclusion

The future of ASO is not a trick; it’s a system. Intelligent discovery rewards apps that express a clear promise, deliver value quickly, satisfy users consistently, and speak the language people actually use when they ask for help. Your job is to engineer those signals through metadata, creatives, onboarding, sentiment, and coherent acquisition, so that ranking models find, understand, and recommend you. If you reframe ASO as signal engineering for intelligent systems, your 90-day roadmap writes itself: clarify the promise, compress time-to-value, tune creatives for comprehension, mine reviews for language, localize for culture, and keep your experiments rolling. Do this, and discovery won’t just be something that happens to you; it will be a capability you own.

Frequently Asked Questions (FAQ)

What is “Intelligent Discovery” in ASO?

Intelligent discovery refers to how app stores now use advanced machine learning, personalization, and entity recognition to recommend and rank apps. Instead of relying solely on keywords and install velocity, they analyze behavioral quality, review sentiment, creatives, and user intent to decide which apps to surface.

Is keyword optimization still important in the future of ASO?

Yes, but it’s no longer enough by itself. Keywords now work best when grouped into intent families that align with how people ask for solutions. You must combine keyword optimization with strong behavioral signals, high-quality creatives, and clear onboarding.

How do behavioral signals influence app rankings?

Behavioral signals include metrics like retention rates, uninstall rates, session length, core action completion, and engagement depth. If users install your app but don’t activate or retain, your ranking potential drops because intelligent systems assume poor relevance.

How does personalization affect ASO strategy?

Personalization means two users searching the same phrase may see different results based on their device type, app history, location, and predicted interests. This requires tight audience targeting so that people you acquire look like people who keep using your app.

What role do app creatives play in intelligent discovery?

Creatives (icons, screenshots, and videos) must not only appeal to humans but also be machine-readable. Models analyze imagery, text overlays, and visual themes to understand your app’s category, benefits, and audience fit. Clear, outcome-first designs improve both human conversion and algorithmic comprehension.

How can reviews and ratings impact ASO in this new era?

Stores now weigh review sentiment velocity (how quickly positive reviews grow) and recency alongside average rating. Recent negative reviews or sentiment drops can quickly lower visibility. Actively prompting happy users and resolving issues before they reach the store is crucial.

How do generative AI features inside app stores change ASO?

Generative AI can summarize app features, extract common themes from reviews, and create curated lists for specific needs. If your metadata and reviews are clear and benefit-driven, these AI summaries will represent your app more accurately and attract better-matched users.

How often should ASO elements be updated in the intelligent discovery era?

Ideally, every 2–4 weeks per locale. Frequent small updates to creatives, copy, and metadata signal freshness, help capture emerging intents, and maintain ranking eligibility in competitive clusters.

Does localization mean just translating app store text?

No. Localization in intelligent discovery means adapting messaging, visuals, and value propositions to cultural preferences. For example, emphasizing “offline mode” in regions with low connectivity or highlighting “privacy” in markets with high data security awareness.

What metrics should I track for modern ASO success?

Key metrics include:

- STAR (Store Traffic Activation Rate): % of store-sourced installs that complete a key action within 24 hours.
- RSM (Review Sentiment Momentum): Recent positivity and velocity of ratings.
- CCI (Cohort Coherence Index): Consistency of retention across acquisition sources.
- Conversion rate: View-to-install ratio in each locale.
- Retention curves: Especially Day 1 and Day 7 from store cohorts.

How does paid UA impact ASO rankings now?

Poorly targeted paid users who churn quickly hurt your behavioral signals. Highly targeted paid UA that delivers engaged users can improve cohort coherence and indirectly boost your organic rankings and recommendation visibility.

What’s the biggest mistake ASO teams make in the intelligent discovery era?

Focusing only on keywords and creatives while ignoring retention, onboarding, and review sentiment. Intelligent systems reward end-to-end satisfaction, not just installs.